Robot Setup

Bring up the robot and position it at the edge of a table, facing the table. When manipulating objects on tables, the workspace of the arms is generally increased by raising the robot torso all the way up.

Place an object on the table, within reach of the robot, and point the head of the robot so that the narrow_stereo point cloud contains part of the table as well as the object. Also make sure that the arms of the robot are off to the side, so that they do not obstruct the robot's view of the table.

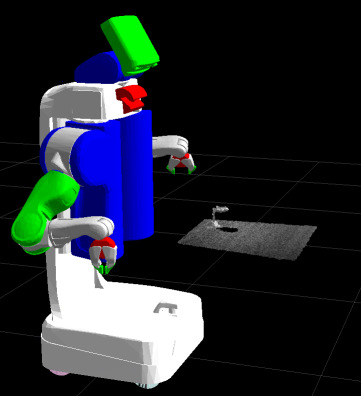

When you are done, the view of the robot and the narrow_stereo point cloud in rviz could look like this:

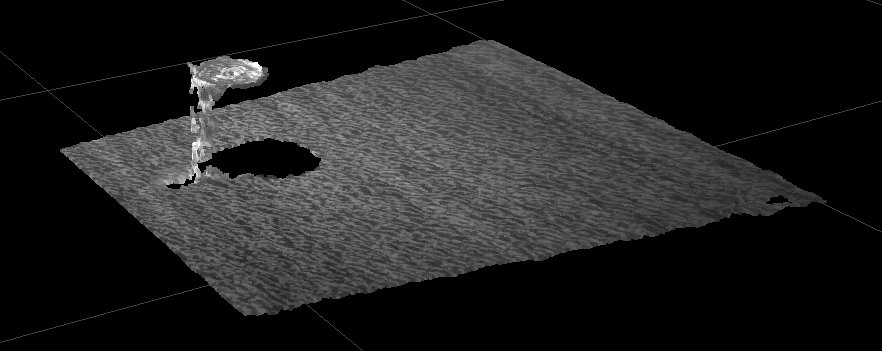

The point cloud from the narrow_stereo should look like this:

For a list of useful rviz markers to be monitored while running the manipulation pipeline see the Troubleshooting page.

Starting the Package

We will create a new package for our application, called pr2_pick_and_place_tutorial. This tutorial depends on four other packages, listed in the package creation command.

roscreate-pkg pr2_pick_and_place_tutorial actionlib object_manipulation_msgs tabletop_object_detector tabletop_collision_map_processing

Writing the Code

We will be adding all the code below in a single source file, in the main function. First we will add the necessary #include directives and a stub for the main function.

1 #include <ros/ros.h>

2

3 #include <actionlib/client/simple_action_client.h>

4 #include <tabletop_object_detector/TabletopDetection.h>

5 #include <tabletop_collision_map_processing/TabletopCollisionMapProcessing.h>

6 #include <object_manipulation_msgs/PickupAction.h>

7 #include <object_manipulation_msgs/PlaceAction.h>

8

9 int main(int argc, char **argv)

10 {

11 }

All the code snippets below then get added, in order, to the main function. At the end of this tutorial you will find a complete snapshot of the source file with all the functionality added in.

Initialization of ROS Service and Action Clients

We will first initialize our ROS node and create the service and action clients we need. We need four clients:

- a service client for object detection

- a service client for the service that takes the detection result and integrated it in the collision environment.

- an action client for the Pickup action

- an action client for the Place action

We initialize all these clients like this:

1 //initialize the ROS node

2 ros::init(argc, argv, "pick_and_place_app");

3 ros::NodeHandle nh;

4

5 //set service and action names

6 const std::string OBJECT_DETECTION_SERVICE_NAME =

7 "/object_detection";

8 const std::string COLLISION_PROCESSING_SERVICE_NAME =

9 "/tabletop_collision_map_processing/tabletop_collision_map_processing";

10 const std::string PICKUP_ACTION_NAME =

11 "/object_manipulator/object_manipulator_pickup";

12 const std::string PLACE_ACTION_NAME =

13 "/object_manipulator/object_manipulator_place";

14

15 //create service and action clients

16 ros::ServiceClient object_detection_srv;

17 ros::ServiceClient collision_processing_srv;

18 actionlib::SimpleActionClient<object_manipulation_msgs::PickupAction>

19 pickup_client(PICKUP_ACTION_NAME, true);

20 actionlib::SimpleActionClient<object_manipulation_msgs::PlaceAction>

21 place_client(PLACE_ACTION_NAME, true);

22

23 //wait for detection client

24 while ( !ros::service::waitForService(OBJECT_DETECTION_SERVICE_NAME,

25 ros::Duration(2.0)) && nh.ok() )

26 {

27 ROS_INFO("Waiting for object detection service to come up");

28 }

29 if (!nh.ok()) exit(0);

30 object_detection_srv =

31 nh.serviceClient<tabletop_object_detector::TabletopDetection>

32 (OBJECT_DETECTION_SERVICE_NAME, true);

33

34 //wait for collision map processing client

35 while ( !ros::service::waitForService(COLLISION_PROCESSING_SERVICE_NAME,

36 ros::Duration(2.0)) && nh.ok() )

37 {

38 ROS_INFO("Waiting for collision processing service to come up");

39 }

40 if (!nh.ok()) exit(0);

41 collision_processing_srv =

42 nh.serviceClient

43 <tabletop_collision_map_processing::TabletopCollisionMapProcessing>

44 (COLLISION_PROCESSING_SERVICE_NAME, true);

45

46 //wait for pickup client

47 while(!pickup_client.waitForServer(ros::Duration(2.0)) && nh.ok())

48 {

49 ROS_INFO_STREAM("Waiting for action client " << PICKUP_ACTION_NAME);

50 }

51 if (!nh.ok()) exit(0);

52

53 //wait for place client

54 while(!place_client.waitForServer(ros::Duration(2.0)) && nh.ok())

55 {

56 ROS_INFO_STREAM("Waiting for action client " << PLACE_ACTION_NAME);

57 }

58 if (!nh.ok()) exit(0);

Object Detection

We will now call the tabletop_object_detector, using the TabletopDetection service:

1 //call the tabletop detection

2 ROS_INFO("Calling tabletop detector");

3 tabletop_object_detector::TabletopDetection detection_call;

4 //we want recognized database objects returned

5 //set this to false if you are using the pipeline without the database

6 detection_call.request.return_clusters = true;

7 //we want the individual object point clouds returned as well

8 detection_call.request.return_models = true;

9 if (!object_detection_srv.call(detection_call))

10 {

11 ROS_ERROR("Tabletop detection service failed");

12 return -1;

13 }

14 if (detection_call.response.detection.result !=

15 detection_call.response.detection.SUCCESS)

16 {

17 ROS_ERROR("Tabletop detection returned error code %d",

18 detection_call.response.detection.result);

19 return -1;

20 }

21 if (detection_call.response.detection.clusters.empty() &&

22 detection_call.response.detection.models.empty() )

23 {

24 ROS_ERROR("The tabletop detector detected the table, "

25 "but found no objects");

26 return -1;

27 }

Note that we are asking for both recognized database objects and individual object point clouds for both known and unknown objects to be returned. If you have started the manipulation pipeline without using the database, simply set detection_call.request.return_models = false; in this service call. Objects will still be returned as unrecognized point clouds.

Collision Map Processing

Once we have the detection results, we will send them to the tabletop_collision_map_processing node. Here they will be added to the collision environment, and receive collision names that we can use to refer to them later. Note that the collision map processing service takes as part of the input the result of the detection service we just called.

1 //call collision map processing

2 ROS_INFO("Calling collision map processing");

3 tabletop_collision_map_processing::TabletopCollisionMapProcessing

4 processing_call;

5 //pass the result of the tabletop detection

6 processing_call.request.detection_result =

7 detection_call.response.detection;

8 //ask for the exising map and collision models to be reset

9 processing_call.request.reset_static_map = true;

10 processing_call.request.reset_collision_models = true;

11 processing_call.request.reset_attached_models = true;

12 //ask for a new static collision map to be taken with the laser

13 //after the new models are added to the environment

14 processing_call.request.take_static_collision_map = true;

15 //ask for the results to be returned in base link frame

16 processing_call.request.desired_frame = "base_link";

17 if (!collision_processing_srv.call(processing_call))

18 {

19 ROS_ERROR("Collision map processing service failed");

20 return -1;

21 }

22 //the collision map processor returns instances of graspable objects

23 if (processing_call.response.graspable_objects.empty())

24 {

25 ROS_ERROR("Collision map processing returned no graspable objects");

26 return -1;

27 }

Note that we request any previous information about objects in the collision environment be reset before the new objects are added. We also ask for a new static collision map to be acquired with the tilting laser after our new objects are added to the environment. This will ensure both that our target objects are in the collision environment and that any other obstacles are captured by the collision map.

The TabletopCollisionMapProcessing service will return a list of GraspableObjects, which can be directly sent to a pickup action request, as shown below.

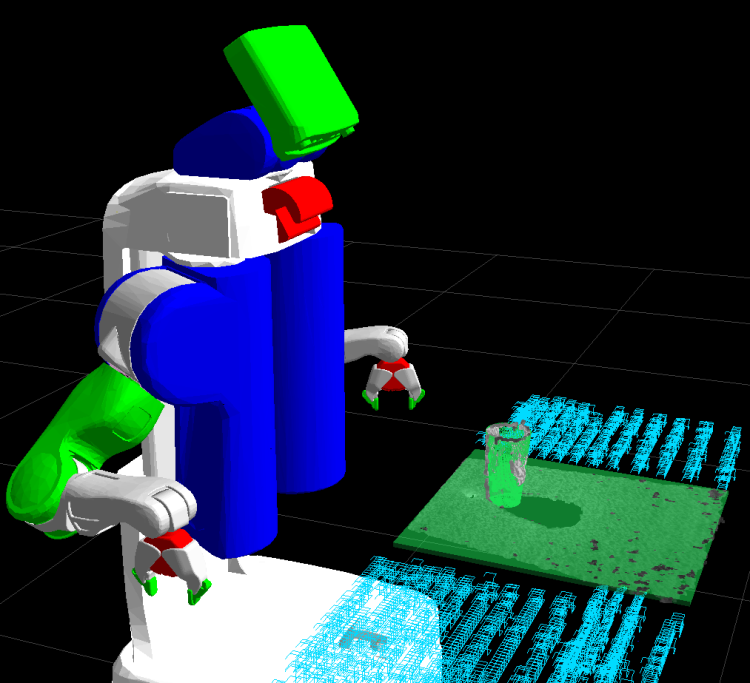

After the collision processing call goes through, you can inspect its result in rviz. Enable Markers on the following topics:

/collision_model_markers/environment_server_right_arm - these markers will show, the results of the tabletop object detection added to the collision environment. Note the table added as a thin box. Database-recognized objects get added as triangle meshes, while unrecognized objects get added to the collision environment as bounding boxes.

/collision_rebroadcast_/environment_server_right_arm - these markers will show the collision map, which is the part of the environment seen by the tilting laser but for which we have no addition semantic information.

Here is an example of what your rviz image could look like, with the tabletop detection results shown in green and the collision map show in blue. Additional details and examples can be found in the tabletop_collision_map_processing package.

Object Pickup

We will now request a pickup of the first object in the list of GraspableObjects returned by the collision map processing service:

1 //call object pickup

2 ROS_INFO("Calling the pickup action");

3 object_manipulation_msgs::PickupGoal pickup_goal;

4 //pass one of the graspable objects returned

5 //by the collision map processor

6 pickup_goal.target = processing_call.response.graspable_objects.at(0);

7 //pass the name that the object has in the collision environment

8 //this name was also returned by the collision map processor

9 pickup_goal.collision_object_name =

10 processing_call.response.collision_object_names.at(0);

11 //pass the collision name of the table, also returned by the collision

12 //map processor

13 pickup_goal.collision_support_surface_name =

14 processing_call.response.collision_support_surface_name;

15 //pick up the object with the right arm

16 pickup_goal.arm_name = "right_arm";

17 //specify the desired distance between pre-grasp and final grasp

18 pickup_goal.desired_approach_distance = 0.1;

19 pickup_goal.min_approach_distance = 0.05;

20 //we will be lifting the object along the "vertical" direction

21 //which is along the z axis in the base_link frame

22 geometry_msgs::Vector3Stamped direction;

23 direction.header.stamp = ros::Time::now();

24 direction.header.frame_id = "base_link";

25 direction.vector.x = 0;

26 direction.vector.y = 0;

27 direction.vector.z = 1;

28 pickup_goal.lift.direction = direction;

29 //request a vertical lift of 10cm after grasping the object

30 pickup_goal.lift.desired_distance = 0.1;

31 pickup_goal.lift.min_distance = 0.05;

32 //do not use tactile-based grasping or tactile-based lift

33 pickup_goal.use_reactive_lift = false;

34 pickup_goal.use_reactive_execution = false;

35 //send the goal

36 pickup_client.sendGoal(pickup_goal);

37 while (!pickup_client.waitForResult(ros::Duration(10.0)))

38 {

39 ROS_INFO("Waiting for the pickup action...");

40 }

41 object_manipulation_msgs::PickupResult pickup_result =

42 *(pickup_client.getResult());

43 if (pickup_client.getState() != actionlib::SimpleClientGoalState::SUCCEEDED)

44 {

45 ROS_ERROR("The pickup action has failed with result code %d",

46 pickup_result.manipulation_result.value);

47 return -1;

48 }

Note the following:

- we are passing to the pickup action goal the names that can be used in the collision environment to refer to both our target object and the table it's resting on (we have these names from the previous step). This helps the pickup action reason about when contacts in various parts of the environment are acceptable. If one or both of these names are blank or incorrect, the pickup will still be attempted, but legal grasps might be rejected by the collision environment because they bring either the robot or the grasped object in contact with the environment

we are requesting that the pickup be executed by an arm/hand combination named "right_arm". This name references a group of parameters on the parameter server, which is where the manipulation pipeline will get its information about the characteristics of the hand being used. These parameters are loaded on the server as part of starting the manipulation pipeline. For details on the arm description parameters look in the object_manipulator documentation, while an example parameter file for the PR2 gripper can be found in pr2_object_manipulation_launch.

- a grasp is typically executed by first moving the arm to a "pre-grasp" position, using padding to ensure the robot does not collide with the environment, then going to the grasp position. This ensures that objects are always approached the same way and allows grasps to be optimized for this.

- the direction of the pre-grasp to grasp motion is part of the characteristics of the gripper, and can not be specified in the pickup goal.

the length of the motion between the pre-grasp and the grasp is specified by the pickup_goal.desired_approach_distance and pickup_goal.min_approach_distance parameters. However, due to obstacles in the environment or robot kinematic constraints, the desired trajectory from pre-grasp to grasp might not be feasible. In our example, we are saying that we would like this distance to the 10cm, but are willing to execute grasps for which this distance is as low as 5cm.

you can set the desired_approach_distance to 0 to get rid of this behavior altogether. However, the arm navigation module might refuse to move the arm directly to the grasp location, as it might think that in that position the arm is colliding with the target object.

- after the object is grasped, the pickup action will attempt to lift it to a safe location, out of contact with its support surface, from where the motion planner can be used to move the arm to some other desired position.

the direction of the lift motion is specified in the pickup goal. In this example, we are requesting that the object be lifted along the "vertical" direction, or along the positive z direction in the base_link frame.

- The length of the lift motion is also specified in the pickup goal. Ideally, we would like the object to be lifted by 10cm, but are willing to executed grasps for which only 5cm of lift after grasp are possible

as an alternative, we could request that the object be lifted in the opposite of the grasp approach direction. In this case, the lift direction would be along negative x, and specified in the r_wrist_roll_link frame. This might be useful when vertical lift is not appropriate, like for example retrieving an object from a fridge shelf.

if you prefer that no lift be performed and the pickup action return as soon as the gripper has been closed on the object, set desired_lift_distance to 0. However, it is possible that the arm_navigation code will have difficulty moving the arm out of that position, as it will think that the grasped object is colliding with its support surface.

in this example, we are leaving the desired_grasps field of the pickup goal empty. This will instruct the object_manipulator to call one of its default grasp planner to generate a list of possible grasps for the object. Alternatively, you can specify your own lists of grasps to be attempted by filling in this field; the grasps will be tried in order until one is deemed feasible, given the current collision environment and kinematic constraints.

if you want to specify your own grasps, they must be encapsulated in the Grasp message. This message contains:

- the hand posture (finger joint angles) for the pre-grasp and the grasp

the hand pose relative to the object for the grasp. For an explanation of the pose of the object used for specifying grasps, see the "Object Location" section of this tutorial.

Object Location

Before placing the object, we must decide where we want the object placed. We will create a place location by shifting the pickup location by 10cm. However, it is important to understand the convention about the location of a http://www.ros.org/doc/api/object_manipulation_msgs/html/msg/GraspableObject.html|GraspableObject]] in the world. This is particularly important since this is the pose that grasps for this object are relative to:

for database recognized object, an object pose is specified as part of the DatabaseModelPose field of the GraspableObject message. This pose is considered to be the origin of the object mesh contained in the database. All grasps for this object are relative to this pose.

for unrecognized objects, the object pose is considered to be the origin of the frame that the object point cloud is in (the object point cloud is contained in the cluster member of the GraspableObject message). All grasps for this object are relative to this pose.

Here is how we shift the pickup location by 10cm to obtain our place location:

1 //remember where we picked the object up from

2 geometry_msgs::PoseStamped pickup_location;

3 if (processing_call.response.graspable_objects.at(0).type ==

4 object_manipulation_msgs::GraspableObject::DATABASE_MODEL)

5 {

6 //for database recognized objects, the location of the object

7 //is encapsulated in GraspableObject the message

8 pickup_location =

9 processing_call.response.graspable_objects.at(0).model_pose.pose;

10 }

11 else

12 {

13 //for unrecognized point clouds, the location of the object

14 //is considered to be the origin of the frame that the

15 //cluster is in

16 pickup_location.header =

17 processing_call.response.graspable_objects.at(0).cluster.header;

18 //identity pose

19 pickup_location.pose.orientation.w = 1;

20 }

21 //create a place location, offset by 10 cm from the pickup location

22 geometry_msgs::PoseStamped place_location = pickup_location;

23 place_location.header.stamp = ros::Time::now();

24 place_location.pose.position.x += 0.1;

Object Place

Now that we have our desired place location, let's send it to the Place action:

1 //put the object down

2 ROS_INFO("Calling the place action");

3 object_manipulation_msgs::PlaceGoal place_goal;

4 //place at the prepared location

5 place_goal.place_pose = place_location;

6 //the collision names of both the objects and the table

7 //same as in the pickup action

8 place_goal.collision_object_name =

9 processing_call.response.collision_object_names.at(0);

10 place_goal.collision_support_surface_name =

11 processing_call.response.collision_support_surface_name;

12 //information about which grasp was executed on the object,

13 //returned by the pickup action

14 place_goal.grasp = pickup_result.grasp;

15 //use the right rm to place

16 place_goal.arm_name = "right_arm";

17 //padding used when determining if the requested place location

18 //would bring the object in collision with the environment

19 place_goal.place_padding = 0.02;

20 //how much the gripper should retreat after placing the object

21 place_goal.desired_retreat_distance = 0.1;

22 place_goal.min_retreat_distance = 0.05;

23 //we will be putting down the object along the "vertical" direction

24 //which is along the z axis in the base_link frame

25 direction.header.stamp = ros::Time::now();

26 direction.header.frame_id = "base_link";

27 direction.vector.x = 0;

28 direction.vector.y = 0;

29 direction.vector.z = -1;

30 place_goal.approach.direction = direction;

31 //request a vertical put down motion of 10cm before placing the object

32 place_goal.approach.desired_distance = 0.1;

33 place_goal.approach.min_distance = 0.05;

34 //we are not using tactile based placing

35 place_goal.use_reactive_place = false;

36 //send the goal

37 place_client.sendGoal(place_goal);

38 while (!place_client.waitForResult(ros::Duration(10.0)))

39 {

40 ROS_INFO("Waiting for the place action...");

41 }

42 object_manipulation_msgs::PlaceResult place_result =

43 *(place_client.getResult());

44 if (place_client.getState() !=

45 actionlib::SimpleClientGoalState::SUCCEEDED)

46 {

47 ROS_ERROR("Place failed with error code %d",

48 place_result.manipulation_result.value);

49 return -1;

50 }

Note the following:

- just like in the Pickup action, we supply the collision names of both the grasped object and the support surface. They serve similar roles as in the pickup action.

- mirroring the pickup action, we have an "approach direction", which will be used by the gripper holding the object to approach the drop location, and a "retreat" which will be performed by the gripper after releasing the object. We specify the approach direction in the place request, but the retreat direction is a characteristic of the gripper. We only specify how much we want the gripper to retreat along this direction after releasing the object.

- just like for pickup, we specify desired approach and retreat distances, but those might not be feasible due to obstacles in the environment or kinematic constraints.

in this example, we approach the table by going "down", along negative z in the base_link frame. We ask for the approach motion to be 10cm, but are willing to accept the place location even if only 5cm of approach are possible

- we also ask for the gripper to retreat for 10cm after releasing the object, but are willing to accept the place location if at least 5cm are possible.

the additional parameter place_padding is used to decide if the requested place location brings the object in collision with the environment. The object's collision model is padded by this amount when the check is performed; use a smaller padding to fit objects into smaller spaces, or a larger padding to be safer when avoiding collisions.

Putting It All Together

After assembling the code above into your main function, you should end up with something resembling the complete file included below. Add the appropriate entry in your CMakeLists.txt to build this source file into an executable, then:

- make sure the robot is positioned as described at the beginning of this tutorial

start the manipulation pipeline (see the relevant tutorial here)

- run this executable

The robot should pick up the object, move it by 10cm, then place it back down.

Troubleshooting

You can find a list of useful rviz markers for monitoring pick and place operations on the troubleshooting page.

Next Steps

From here, you should be able to integrate pick and place functionality into your desired application. You can find more details in the individual documentation pages of the manipulation pipeline stacks:

Additional examples in both C++ and Python can be found in the pr2_pick_and_place_demos package.

The complete source code

Here is the complete source code for this tutorial.

1 #include <ros/ros.h>

2

3 #include <actionlib/client/simple_action_client.h>

4 #include <tabletop_object_detector/TabletopDetection.h>

5 #include <tabletop_collision_map_processing/TabletopCollisionMapProcessing.h>

6 #include <object_manipulation_msgs/PickupAction.h>

7 #include <object_manipulation_msgs/PlaceAction.h>

8

9 int main(int argc, char **argv)

10 {

11 //initialize the ROS node

12 ros::init(argc, argv, "pick_and_place_app");

13 ros::NodeHandle nh;

14

15 //set service and action names

16 const std::string OBJECT_DETECTION_SERVICE_NAME = "/object_detection";

17 const std::string COLLISION_PROCESSING_SERVICE_NAME =

18 "/tabletop_collision_map_processing/tabletop_collision_map_processing";

19 const std::string PICKUP_ACTION_NAME =

20 "/object_manipulator/object_manipulator_pickup";

21 const std::string PLACE_ACTION_NAME =

22 "/object_manipulator/object_manipulator_place";

23

24 //create service and action clients

25 ros::ServiceClient object_detection_srv;

26 ros::ServiceClient collision_processing_srv;

27 actionlib::SimpleActionClient<object_manipulation_msgs::PickupAction>

28 pickup_client(PICKUP_ACTION_NAME, true);

29 actionlib::SimpleActionClient<object_manipulation_msgs::PlaceAction>

30 place_client(PLACE_ACTION_NAME, true);

31

32 //wait for detection client

33 while ( !ros::service::waitForService(OBJECT_DETECTION_SERVICE_NAME,

34 ros::Duration(2.0)) && nh.ok() )

35 {

36 ROS_INFO("Waiting for object detection service to come up");

37 }

38 if (!nh.ok()) exit(0);

39 object_detection_srv =

40 nh.serviceClient<tabletop_object_detector::TabletopDetection>

41 (OBJECT_DETECTION_SERVICE_NAME, true);

42

43 //wait for collision map processing client

44 while ( !ros::service::waitForService(COLLISION_PROCESSING_SERVICE_NAME,

45 ros::Duration(2.0)) && nh.ok() )

46 {

47 ROS_INFO("Waiting for collision processing service to come up");

48 }

49 if (!nh.ok()) exit(0);

50 collision_processing_srv =

51 nh.serviceClient

52 <tabletop_collision_map_processing::TabletopCollisionMapProcessing>

53 (COLLISION_PROCESSING_SERVICE_NAME, true);

54

55 //wait for pickup client

56 while(!pickup_client.waitForServer(ros::Duration(2.0)) && nh.ok())

57 {

58 ROS_INFO_STREAM("Waiting for action client " << PICKUP_ACTION_NAME);

59 }

60 if (!nh.ok()) exit(0);

61

62 //wait for place client

63 while(!place_client.waitForServer(ros::Duration(2.0)) && nh.ok())

64 {

65 ROS_INFO_STREAM("Waiting for action client " << PLACE_ACTION_NAME);

66 }

67 if (!nh.ok()) exit(0);

68

69

70

71 //call the tabletop detection

72 ROS_INFO("Calling tabletop detector");

73 tabletop_object_detector::TabletopDetection detection_call;

74 //we want recognized database objects returned

75 //set this to false if you are using the pipeline without the database

76 detection_call.request.return_clusters = true;

77 //we want the individual object point clouds returned as well

78 detection_call.request.return_models = true;

79 if (!object_detection_srv.call(detection_call))

80 {

81 ROS_ERROR("Tabletop detection service failed");

82 return -1;

83 }

84 if (detection_call.response.detection.result !=

85 detection_call.response.detection.SUCCESS)

86 {

87 ROS_ERROR("Tabletop detection returned error code %d",

88 detection_call.response.detection.result);

89 return -1;

90 }

91 if (detection_call.response.detection.clusters.empty() &&

92 detection_call.response.detection.models.empty() )

93 {

94 ROS_ERROR("The tabletop detector detected the table, but found no objects");

95 return -1;

96 }

97

98

99

100 //call collision map processing

101 ROS_INFO("Calling collision map processing");

102 tabletop_collision_map_processing::TabletopCollisionMapProcessing

103 processing_call;

104 //pass the result of the tabletop detection

105 processing_call.request.detection_result = detection_call.response.detection;

106 //ask for the exising map and collision models to be reset

107 processing_call.request.reset_static_map = true;

108 processing_call.request.reset_collision_models = true;

109 processing_call.request.reset_attached_models = true;

110 //ask for a new static collision map to be taken with the laser

111 //after the new models are added to the environment

112 processing_call.request.take_static_collision_map = true;

113 //ask for the results to be returned in base link frame

114 processing_call.request.desired_frame = "base_link";

115 if (!collision_processing_srv.call(processing_call))

116 {

117 ROS_ERROR("Collision map processing service failed");

118 return -1;

119 }

120 //the collision map processor returns instances of graspable objects

121 if (processing_call.response.graspable_objects.empty())

122 {

123 ROS_ERROR("Collision map processing returned no graspable objects");

124 return -1;

125 }

126

127

128 //call object pickup

129 ROS_INFO("Calling the pickup action");

130 object_manipulation_msgs::PickupGoal pickup_goal;

131 //pass one of the graspable objects returned by the collission map processor

132 pickup_goal.target = processing_call.response.graspable_objects.at(0);

133 //pass the name that the object has in the collision environment

134 //this name was also returned by the collision map processor

135 pickup_goal.collision_object_name =

136 processing_call.response.collision_object_names.at(0);

137 //pass the collision name of the table, also returned by the collision

138 //map processor

139 pickup_goal.collision_support_surface_name =

140 processing_call.response.collision_support_surface_name;

141 //pick up the object with the right arm

142 pickup_goal.arm_name = "right_arm";

143 //specify the desired distance between pre-grasp and final grasp

144 pickup_goal.desired_approach_distance = 0.1;

145 pickup_goal.min_approach_distance = 0.05;

146 //we will be lifting the object along the "vertical" direction

147 //which is along the z axis in the base_link frame

148 geometry_msgs::Vector3Stamped direction;

149 direction.header.stamp = ros::Time::now();

150 direction.header.frame_id = "base_link";

151 direction.vector.x = 0;

152 direction.vector.y = 0;

153 direction.vector.z = 1;

154 pickup_goal.lift.direction = direction;

155 //request a vertical lift of 10cm after grasping the object

156 pickup_goal.lift.desired_distance = 0.1;

157 pickup_goal.lift.min_distance = 0.05;

158 //do not use tactile-based grasping or tactile-based lift

159 pickup_goal.use_reactive_lift = false;

160 pickup_goal.use_reactive_execution = false;

161 //send the goal

162 pickup_client.sendGoal(pickup_goal);

163 while (!pickup_client.waitForResult(ros::Duration(10.0)))

164 {

165 ROS_INFO("Waiting for the pickup action...");

166 }

167 object_manipulation_msgs::PickupResult pickup_result =

168 *(pickup_client.getResult());

169 if (pickup_client.getState() != actionlib::SimpleClientGoalState::SUCCEEDED)

170 {

171 ROS_ERROR("The pickup action has failed with result code %d",

172 pickup_result.manipulation_result.value);

173 return -1;

174 }

175

176

177

178 //remember where we picked the object up from

179 geometry_msgs::PoseStamped pickup_location;

180 if (processing_call.response.graspable_objects.at(0).type ==

181 object_manipulation_msgs::GraspableObject::DATABASE_MODEL)

182 {

183 //for database recognized objects, the location of the object

184 //is encapsulated in GraspableObject the message

185 pickup_location =

186 processing_call.response.graspable_objects.at(0).model_pose.pose;

187 }

188 else

189 {

190 //for unrecognized point clouds, the location of the object is considered

191 //to be the origin of the frame that the cluster is in

192 pickup_location.header =

193 processing_call.response.graspable_objects.at(0).cluster.header;

194 //identity pose

195 pickup_location.pose.orientation.w = 1;

196 }

197 //create a place location, offset by 10 cm from the pickup location

198 geometry_msgs::PoseStamped place_location = pickup_location;

199 place_location.header.stamp = ros::Time::now();

200 place_location.pose.position.x += 0.1;

201

202

203

204 //put the object down

205 ROS_INFO("Calling the place action");

206 object_manipulation_msgs::PlaceGoal place_goal;

207 //place at the prepared location

208 place_goal.place_pose = place_location;

209 //the collision names of both the objects and the table

210 //same as in the pickup action

211 place_goal.collision_object_name =

212 processing_call.response.collision_object_names.at(0);

213 place_goal.collision_support_surface_name =

214 processing_call.response.collision_support_surface_name;

215 //information about which grasp was executed on the object, returned by

216 //the pickup action

217 place_goal.grasp = pickup_result.grasp;

218 //use the right rm to place

219 place_goal.arm_name = "right_arm";

220 //padding used when determining if the requested place location

221 //would bring the object in collision with the environment

222 place_goal.place_padding = 0.02;

223 //how much the gripper should retreat after placing the object

224 place_goal.desired_retreat_distance = 0.1;

225 place_goal.min_retreat_distance = 0.05;

226 //we will be putting down the object along the "vertical" direction

227 //which is along the z axis in the base_link frame

228 direction.header.stamp = ros::Time::now();

229 direction.header.frame_id = "base_link";

230 direction.vector.x = 0;

231 direction.vector.y = 0;

232 direction.vector.z = -1;

233 place_goal.approach.direction = direction;

234 //request a vertical put down motion of 10cm before placing the object

235 place_goal.approach.desired_distance = 0.1;

236 place_goal.approach.min_distance = 0.05;

237 //we are not using tactile based placing

238 place_goal.use_reactive_place = false;

239 //send the goal

240 place_client.sendGoal(place_goal);

241 while (!place_client.waitForResult(ros::Duration(10.0)))

242 {

243 ROS_INFO("Waiting for the place action...");

244 }

245 object_manipulation_msgs::PlaceResult place_result =

246 *(place_client.getResult());

247 if (place_client.getState() != actionlib::SimpleClientGoalState::SUCCEEDED)

248 {

249 ROS_ERROR("Place failed with error code %d",

250 place_result.manipulation_result.value);

251 return -1;

252 }

253

254 //success!

255 ROS_INFO("Success! Object moved.");

256 return 0;

257 }